“AI won’t just support workflows. It will own them. The shift has already begun—from tools that assist to agents that act.”

A Paradigm Shift in Product Thinking

We’re at the cusp of a fundamental shift in how we think about software. For years, we’ve designed tools: applications that respond to user input, making tasks faster, easier, or more delightful. But with the rise of Generative AI and large language models (LLMs), we’re no longer just building tools—we’re building agents.

Agents don’t just wait for instructions. They reason, act, and collaborate: they proactively identify goals, choose the right paths, adapt to context, and handle uncertainty. Unlike tools, which serve as responsive utilities, agents serve as proactive collaborators.

This blog explores how AI product creators can transition from building passive tools to designing active agents—and why that evolution isn’t just about user experience. It’s about reshaping the architecture, the feedback model, the success metrics, and even the culture of enterprise software.

Tools vs. Agents: A Philosophical and Practical Divide

The shift from tools to agents marks a foundational change in how we conceptualize and engineer software. Traditional tools follow imperative logic—they wait for user commands, execute within pre-defined boundaries, and rely on rule-based workflows. These systems assume the user has context, knows their goal, and simply needs a means to act.

Agents, by contrast, operate on declarative intent. They interpret ambiguous inputs, infer goals, and autonomously decide next steps based on context, memory, and learned patterns. Rather than acting as passive instruments, agents behave like cognitive systems capable of perception, planning, and self-directed execution.

From an architectural lens, tools are typically stateless—they process inputs in isolation, with little memory of past interactions. Agents, however, are inherently stateful. They build on interaction history, retrieve relevant information dynamically, and adapt their behavior through learned experience. Their functioning depends not only on LLMs but also on memory stores, retrieval systems, fine-tuned models, and prompt-driven control flows.

In practical terms, a tool might generate a filtered supplier list. An agent, on the other hand, would analyze past transactions, detect anomalies, assess contract compliance risk, and proactively recommend renegotiation—all without being explicitly instructed.
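To make the supplier contrast concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `Transaction` type, the function names, and the simple z-score rule standing in for the richer analysis a real agent would run. The tool returns only what was asked for; the agent-like function scans the history unprompted and surfaces a recommendation.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Transaction:
    supplier: str
    amount: float


def filter_suppliers(transactions, min_amount):
    """Tool behaviour: return exactly what the user asked for, nothing more."""
    return sorted({t.supplier for t in transactions if t.amount >= min_amount})


def review_suppliers(transactions, z_threshold=2.0):
    """Agent-like behaviour: scan history unprompted and flag unusual invoices."""
    by_supplier = {}
    for t in transactions:
        by_supplier.setdefault(t.supplier, []).append(t.amount)

    recommendations = []
    for supplier, amounts in by_supplier.items():
        history, latest = amounts[:-1], amounts[-1]
        if len(history) < 3:
            continue  # too little history to judge
        mu, sigma = mean(history), pstdev(history)
        if sigma == 0:
            continue  # no variation in history, so no z-score can be computed
        if (latest - mu) / sigma > z_threshold:
            recommendations.append(
                f"Review pricing with {supplier}: latest invoice {latest:.0f} "
                f"is well above its usual level of about {mu:.0f}"
            )
    return recommendations
```

The anomaly rule is deliberately crude. The point is the shape of the interface: `filter_suppliers` needs a question, while `review_suppliers` only needs data.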

This difference is not just functional; it’s architectural. Tools are typically UI-first and logic-bound, while agents are model-first, orchestrated, and grounded in hybrid AI stacks that combine symbolic and sub-symbolic computation. Agents use components like vector databases, tool routers, policy layers, and reasoning graphs to operate intelligently in complex environments.

Critically, agents don’t just deliver answers—they identify the right questions. They offload cognitive effort from users and act as strategic collaborators. This evolution isn’t incremental—it redefines software from static tools to adaptive, goal-seeking systems.

Why This Shift Matters in Enterprise SaaS

Enterprise SaaS platforms have long been optimized for standardization, compliance, and process control—delivering value through deterministic workflows, form-based inputs, and rules-based logic. But that paradigm is fraying at the edges. In today’s enterprise, users are inundated with real-time data, fragmented signals, and fast-moving decisions that span functional silos. The result is cognitive overload and operational drag—challenges that traditional tools were never designed to solve.

Tools presume the user knows what question to ask, how to filter data, and which levers to pull. But in high-context environments like procurement, finance, and supply chain, users often don’t know what they’re missing. Agents resolve this by reasoning over context, identifying patterns, and surfacing insights before a request is made. They move from being passive instruments to cognitive collaborators.

This shift offers powerful business value. First, agents reduce decision latency by continuously monitoring conditions and autonomously surfacing anomalies, risks, or opportunities. Second, they democratize intelligence—bridging technical and non-technical users through natural language interfaces. Third, they enable systems that learn over time, using embedded feedback loops to adapt and evolve without explicit reprogramming. And finally, agents support scale and localization without complexity—offering adaptive, personalized workflows without endless configuration overhead.

Technically, this also reframes the SaaS stack. Where traditional applications were built on static logic layers, agentic systems require orchestration of LLMs, retrieval pipelines, vector stores, and real-time monitoring infrastructure for bias, drift, and hallucination control. The platform becomes an evolving system, not a fixed product.

Strategically, the shift from tools to agents transforms how enterprise software is judged. It’s no longer about feature checklists—it’s about outcome alignment, intelligence density, and adaptability under change.

The Stack Behind Agents: More Than Just LLMs

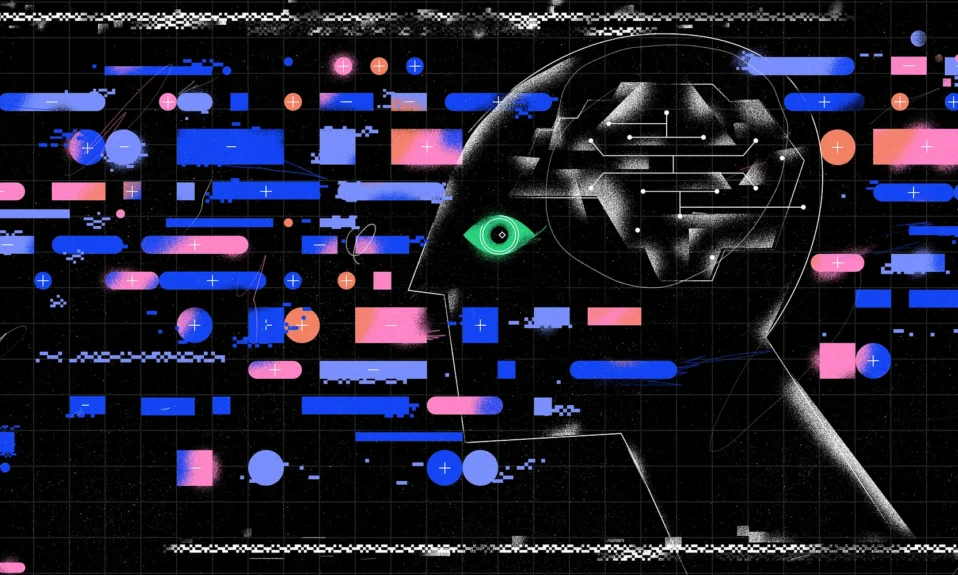

While large language models (LLMs) like GPT-4, Claude, and Gemini provide the linguistic and reasoning core of AI agents, they represent just one layer in a much deeper architecture. An LLM on its own is stateless—it can interpret prompts and generate output, but it lacks persistent memory, domain grounding, or the ability to act. Turning a model into an enterprise-grade agent requires a coordinated system of supporting components.

The first layer beyond the LLM is Retrieval-Augmented Generation (RAG), which allows agents to access current, domain-specific data from vector stores, databases, or APIs. This retrieval step helps ground responses in trusted knowledge, reducing hallucinations. Research such as Google’s REALM and Meta’s original RAG work shaped this pattern.
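The retrieval step described above can be sketched in a few lines of Python. This is a toy under stated assumptions: word overlap stands in for embedding search, the documents are invented, and `retrieve` and `build_grounded_prompt` are hypothetical names rather than any library's API. The resulting prompt would then be sent to whichever LLM the agent uses.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Rank documents by word overlap with the query (a stand-in for vector search)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(doc)), doc) for doc in documents]
    scored = [item for item in scored if item[0] > 0]  # drop documents with no overlap
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_grounded_prompt(query, documents, k=2):
    """Assemble a prompt that asks the model to answer only from retrieved context."""
    context = retrieve(query, documents, k)
    lines = ["Answer using only the context below. Say 'unknown' if it is not covered.", ""]
    lines += [f"[{i}] {doc}" for i, doc in enumerate(context, start=1)]
    lines += ["", f"Question: {query}"]
    return "\n".join(lines)
```

Numbering the context passages also gives the interface something to cite when it shows users where an answer came from.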

To maintain continuity and personalization, agents also rely on long-term memory systems. These are often backed by vector databases like Pinecone or Weaviate, or by similarity-search libraries like FAISS, which store embeddings of past interactions and enable semantic recall using similarity search.
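As a rough illustration of semantic recall, the sketch below keeps embeddings in a plain Python list and ranks them by cosine similarity. The `MemoryStore` class is hypothetical, and the vectors would come from an embedding model in practice; here they are supplied by hand. A production system would delegate storage and search to one of the vector stores named above.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class MemoryStore:
    """In-memory stand-in for a vector database: stores (embedding, text) pairs."""

    def __init__(self):
        self._items = []

    def add(self, embedding, text):
        self._items.append((list(embedding), text))

    def recall(self, query_embedding, k=1):
        """Return the k stored texts whose embeddings are most similar to the query."""
        ranked = sorted(
            self._items,
            key=lambda item: cosine_similarity(query_embedding, item[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]
```

A linear scan like this is fine for a demo; dedicated vector stores exist because it stops scaling once the memory grows large.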

Agents must also plan and act. Frameworks like LangChain, AutoGen, and CrewAI provide orchestration layers for managing multi-step plans, tool calls, and interaction flows. These systems can pair LLM reasoning with ideas from classical AI planning, such as hierarchical task networks (HTNs), and with graph-based execution logic.
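Graph-based execution is easier to picture with a small example. The sketch below is not how any of the frameworks above work internally; it only assumes a plan expressed as named steps plus dependencies, and uses Python's standard `graphlib` module (Python 3.9+) to run them in a valid order. In a real agent the plan itself would typically be proposed by the model; `run_plan` is a hypothetical helper.

```python
from graphlib import TopologicalSorter


def run_plan(steps, dependencies):
    """Run named steps in dependency order, passing earlier results to later steps.

    steps: dict of step name -> function taking a dict of prior results.
    dependencies: dict of step name -> set of step names it depends on.
    """
    # Give every step an entry so steps without dependencies are still scheduled.
    graph = {name: dependencies.get(name, set()) for name in steps}
    results = {}
    # static_order() yields each step only after all of its dependencies.
    for name in TopologicalSorter(graph).static_order():
        results[name] = steps[name](results)
    return results
```

A three-step plan might look like this:

```python
steps = {
    "fetch_spend": lambda r: [120, 80, 300],
    "total": lambda r: sum(r["fetch_spend"]),
    "summarize": lambda r: f"Total spend: {r['total']}",
}
deps = {"total": {"fetch_spend"}, "summarize": {"total"}}
print(run_plan(steps, deps)["summarize"])  # Total spend: 500
```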

Through a tool execution interface, agents can act on insights, whether updating CRMs, querying financial systems, or triggering workflows. OpenAI’s function calling and LangChain’s tool abstractions are common ways to wire up this integration.
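Stripped of any vendor specifics, a tool execution interface comes down to a registry of allowed functions and a dispatcher that validates what the model proposes. The sketch below assumes the model emits a JSON object with `name` and `arguments` keys; that shape, the `tool` decorator, and `update_crm` are illustrative assumptions, not a specific provider's format.

```python
import json

TOOLS = {}


def tool(fn):
    """Register a function so the agent may call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def update_crm(account, status):
    # Hypothetical action; a real implementation would call the CRM's API.
    return f"CRM updated: {account} -> {status}"


def execute_tool_call(raw_call):
    """Validate and run a model-proposed call of the form {"name": ..., "arguments": {...}}."""
    call = json.loads(raw_call)
    name = call.get("name")
    if name not in TOOLS:
        return f"error: unknown tool {name!r}"
    try:
        return TOOLS[name](**call.get("arguments", {}))
    except TypeError as exc:
        # Raised when the proposed arguments do not match the function signature.
        return f"error: bad arguments for {name}: {exc}"
```

Returning errors as strings, rather than raising, lets the orchestration layer feed them back to the model so it can correct the call.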

Critically, enterprise agents must operate safely. Governance layers monitor latency, prompt injections, and policy violations. Tools like Guardrails.ai and moderation APIs support observability and compliance.
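A governance layer can start as a thin wrapper around every model call. The sketch below is a minimal illustration with hypothetical names: it screens input against two hand-written patterns and records latency. Pattern matching of this kind catches only the most obvious injection attempts, so treat it as a placeholder for dedicated tooling rather than a defense.

```python
import re
import time

# Illustrative patterns only; real injection attempts are far more varied.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your )?system prompt", re.IGNORECASE),
]


def screen_input(text):
    """Return the patterns matched by the text (an empty list means no match)."""
    return [p.pattern for p in INJECTION_PATTERNS if p.search(text)]


def governed_call(model_fn, user_input, max_latency_s=2.0):
    """Wrap a model call with an input screen and a latency measurement."""
    violations = screen_input(user_input)
    if violations:
        return {"status": "blocked", "violations": violations, "output": None}
    start = time.perf_counter()
    output = model_fn(user_input)
    latency = time.perf_counter() - start
    status = "slow" if latency > max_latency_s else "ok"
    return {"status": status, "latency_s": latency, "output": output}
```

Because every call returns a structured record, the same wrapper can feed dashboards and audit logs.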

Finally, feedback loops capture both explicit and implicit user responses to adapt agent behavior—enabling continuous learning through fine-tuning, prompt adjustment, or model refinement using adapters like LoRA or QLoRA.

Moving Beyond MVP: Toward MAPs (Model-Aware Products)

The MVP (Minimum Viable Product) philosophy of testing fast and iterating faster has served startups and enterprise teams for well over a decade. But when it comes to AI-native products, especially agents, MVP thinking begins to break down.

Why? Because MVPs assume deterministic systems. You build, test, and get repeatable outputs. AI systems, especially those based on LLMs, are inherently probabilistic. The same prompt might yield different results. User experience may vary based on context, inputs, or unseen model biases. In such systems, controlling outcomes isn’t about predefining rules—it’s about shaping behavior.

Enter the concept of Model-Aware Products (MAPs). A MAP isn’t just a lean version of your product—it’s an intelligent layer that is aware of the model’s architecture, its training data limitations, its performance patterns, and how it interacts with real-time data. It includes guardrails, observability tools, prompt versioning mechanisms, fallbacks, and pathways for human-in-the-loop interventions.
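Several of these MAP ingredients fit in one small function. The sketch below assumes models are plain callables that take a prompt string, that prompts are stored under versioned keys, and that an `is_acceptable` check decides whether an output can be shown. All names are hypothetical, and the escalation message stands in for a real review queue.

```python
from dataclasses import dataclass

# Versioned prompt templates, so every answer can be traced to the prompt that produced it.
PROMPTS = {
    "summarize@v1": "Summarize the following text: {text}",
    "summarize@v2": "Summarize the following text in one sentence, citing figures exactly: {text}",
}


@dataclass
class Answer:
    text: str
    prompt_version: str
    source: str  # "primary", "fallback", or "human_review"


def answer_with_fallback(text, primary, fallback, is_acceptable, version="summarize@v2"):
    """Try the primary model, then a fallback, then hand off to a human reviewer."""
    prompt = PROMPTS[version].format(text=text)
    for source, model in (("primary", primary), ("fallback", fallback)):
        try:
            output = model(prompt)
        except Exception:
            continue  # treat any model failure as "try the next option"
        if is_acceptable(output):
            return Answer(output, version, source)
    return Answer("Escalated for human review.", version, "human_review")
```

Recording `prompt_version` and `source` on every answer is what makes later benchmarking and auditing possible.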

In building a MAP, the product lifecycle looks different. It begins with prompt engineering and model grounding, continues into performance benchmarking using hallucination rates and precision metrics, and evolves with continuous tuning based on real usage. A/B testing, in this world, doesn’t test layout variations—it compares prompt effectiveness or retrieval accuracy. Success isn’t just about clicks or time-on-page—it’s about user trust, clarity, and decision confidence.
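To show what comparing prompt effectiveness might look like, here is a small sketch that scores two prompt variants on a crude hallucination proxy: the share of answers containing a number that does not appear in the source text. The proxy and all function names are assumptions chosen for brevity; real evaluations use richer checks and human review.

```python
import re


def _numbers(text):
    """All integer or decimal numbers appearing in the text."""
    return set(re.findall(r"\d+(?:\.\d+)?", text))


def unsupported_numbers(answer, source):
    """Numbers that appear in the answer but not in the source text."""
    return _numbers(answer) - _numbers(source)


def hallucination_rate(answers, sources):
    """Share of answers containing at least one unsupported number (a crude proxy)."""
    flagged = sum(1 for a, s in zip(answers, sources) if unsupported_numbers(a, s))
    return flagged / len(answers) if answers else 0.0


def compare_prompts(results_a, results_b, sources):
    """Return per-variant rates and the variant with the lower rate ('tie' if equal)."""
    rate_a = hallucination_rate(results_a, sources)
    rate_b = hallucination_rate(results_b, sources)
    winner = "tie" if rate_a == rate_b else ("A" if rate_a < rate_b else "B")
    return {"A": rate_a, "B": rate_b, "winner": winner}
```

With only a handful of samples the difference between variants is not statistically meaningful, so a real comparison needs a larger evaluation set.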

This redefinition of product management requires a shift in team capabilities too. PMs must understand model behaviors, data scientists must think in terms of end-user impact, and designers must craft flows not just for interaction—but for interpretation, explanation, and recourse.

Designing for Trust: Building Human-Compatible Agents

No matter how smart an agent becomes, if users don’t trust it, they won’t use it. Trust becomes the currency of agent adoption—and designing for it is both an art and a science.

Building trust in AI-native products requires visibility into how agents reason, why they make the recommendations they do, and what boundaries govern their behavior. Unlike traditional software, where outputs are either right or wrong, agentic outputs live in shades of plausibility. As such, they must offer transparency into their decision-making process. This might mean surfacing the sources used in RAG, showing intermediate reasoning steps, or offering a “why” behind every “what.”

Equally important is control. Users must feel empowered to override or correct agents. This is not just about checkboxes or settings—it’s about designing interfaces where humans can intervene, provide feedback, and train the system in real time. Trust is built when users feel they are co-piloting—not being overruled.

Boundaries matter too. Designers and developers must embed clear constraints or guardrails into the system whenever agents propose actions with financial, legal, or operational consequences. These constraints should be configurable, visible, and enforceable—not abstract.
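One way to make such constraints configurable, visible, and enforceable is to express them as data and check every proposed action against them. The sketch below is illustrative only: the `ActionPolicy` fields, the thresholds, and the action names are assumptions, not a recommended policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionPolicy:
    auto_approve_limit: float  # amounts up to this run without review
    hard_limit: float          # amounts above this are always rejected
    blocked_actions: frozenset = frozenset()  # actions the agent may never take


def check_action(action, amount, policy):
    """Return 'allow', 'needs_approval', or 'deny' for a proposed agent action."""
    if action in policy.blocked_actions or amount > policy.hard_limit:
        return "deny"
    if amount > policy.auto_approve_limit:
        return "needs_approval"
    return "allow"
```

Because the policy is a plain object, it can be shown to users in the interface and changed by administrators without touching agent logic.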

Designing for trust means that every agent interaction needs to earn credibility. Not once, but continuously. Product teams must monitor trust erosion points, listen for friction signals, and proactively adapt. In this way, trust becomes a product feature—designed, deployed, and iterated like any other.

Avoiding Common Pitfalls

As more teams race to embed AI in their workflows, some common traps consistently emerge. The first is over-indexing on LLM capabilities without integrating domain grounding. A general-purpose agent may dazzle in demos but flounder in real-world contexts if not backed by curated, contextual data pipelines.

Another frequent error is treating agents as fixed logic systems rather than adaptive ones. Without robust feedback mechanisms, agents grow stale and disconnected from user expectations. Even worse, agents that learn the wrong lessons—because of biased feedback or sparse correction—can become misaligned, losing relevance or trust.

Teams also underestimate the operational overhead of maintaining agents. Unlike tools, which can remain static, agents require continuous monitoring, tuning, and governance. Teams must version, audit, and continuously align agents with evolving business processes.

Finally, skipping user onboarding is a silent killer. Agents are a new interaction model. Users need guidance on what to expect, how to query, when to trust, and how to course-correct. Even powerful agents often go underutilized or get misused without proper onboarding and user guidance.

Avoiding these pitfalls requires a mindset shift—from deploying functionality to curating behavior, from shipping code to shaping cognition.

Final Thoughts

From dashboards to decision-makers, from workflows to reasoning agents, we’re witnessing a transformation not just in technology—but in how products think, learn, and serve. AI-native products aren’t just smarter interfaces. They’re systems that perceive, adapt, and evolve.

As product leaders, we must embrace this complexity. We must learn to speak the language of models, to understand feedback systems, and to craft interfaces that invite trust without surrendering control. Our users no longer just need faster tools—they need intelligent partners.

The agent era isn’t a trend. It’s the next foundation.

Recommended Reading & Tools

- Stanford HAI: Foundational Agents

- Google DeepMind: Principles for Trustworthy Agents

- OpenAI Cookbook: RAG